Generative AI, a subset of artificial intelligence, excels in creating content, such as text, images, or code, autonomously. When integrated with Integrated Development Environments (IDEs), generative AI becomes a powerful tool for developers. It can assist in code generation, offering suggestions, completing repetitive tasks, and even identifying potential errors. This synergy enhances the productivity and creativity of developers, as they can focus on higher-level aspects of software development while the AI takes care of routine and time-consuming tasks. Moreover, generative AI in IDEs fosters collaborative coding environments by providing real-time code suggestions and can adapt to different programming languages and coding styles, ultimately streamlining the software development process.

That, certainly, is how generative AI sees itself: the opening paragraph was cut and pasted straight from ChatGPT in response to the prompt “Write me a short paragraph about generative AI and how it can integrate with IDEs”. The rest of this article is written by humans… and we want to focus on some of the challenges faced by developers who want to engage in this exciting new world.

What different AI models are available?

We looked at some of the popular tools, including:

- GitHub Copilot

- Overflow AI

- JetBrains AI Assistant

GitHub Copilot, which has a large user base, can be integrated with many different IDEs, such as Visual Studio Code. It accepts natural language prompts in the form of code comments and generates sections of code or, in some cases, entire functions. GitHub Copilot is powered by OpenAI Codex, a modified, production version of GPT-3, a deep-learning language model that produces human-like text.

Another popular tool is Overflow AI, from Stack Overflow, which responds to natural language prompts by searching the Stack Overflow database for the information best suited to solving the problem. It also links the relevant posts as citations, so the user can see the comments and similar posts that may help. Overflow AI uses the Microsoft Azure OpenAI APIs together with Stack Overflow's own large dataset to inform its responses.

Is data transfer and storage secure?

It was important to us to understand how data is transferred from the IDE and stored during processing. Could it lead to increased vulnerabilities and potential security risks? As a company with a strong ‘secure by design’ ethos built into our ISO 27001 management system, this was a key consideration.

For a business account using GitHub Copilot, prompt data and suggestions are stored only until the prompt has been successfully processed. Once it has, the prompt data is deleted from GitHub's data stores, although it is worth noting that user engagement data can be stored for up to 24 months (GitHub Copilot Trust Center – GitHub Resources).

Still, this is better than the Microsoft Azure OpenAI model, where prompt data and suggestions are stored for the duration of the search! Microsoft also stores user engagement information, which potentially opens up more vulnerabilities, i.e. more ways for data to be accessed without permission. Prompts and suggestions are protected in transit by TLS (Transport Layer Security), which encrypts the traffic so that intercepted packets are unreadable. If TLS is configured incorrectly, however, data could be intercepted and read, allowing third parties to eavesdrop on communications between a developer's IDE and the AI service.
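What "configured correctly" means on the client side can be sketched with Python's standard `ssl` module. This is an illustration under our own assumptions, not how any IDE plugin or AI provider actually sets up its connections: the helper name `make_client_context` is hypothetical, and the TLS 1.2 minimum is an assumed policy. The key points are that the server certificate is verified against trusted CAs and that the hostname is checked, since disabling either is exactly the kind of misconfiguration that allows interception.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    # Hypothetical helper for illustration only.
    # create_default_context() loads the system's trusted CA certificates
    # and enables certificate verification and hostname checking.
    ctx = ssl.create_default_context()
    # Assumed policy: refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # These match the defaults; setting them explicitly makes any later
    # change (e.g. check_hostname = False, verify_mode = ssl.CERT_NONE)
    # stand out in code review as a deliberate weakening.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


ctx = make_client_context()
# Usage (not executed here, as it needs network access):
# import socket
# with socket.create_connection(("example.com", 443)) as sock:
#     with ctx.wrap_socket(sock, server_hostname="example.com") as tls:
#         ...  # traffic on `tls` is encrypted and the server is authenticated
```

A context built with certificate verification turned off would still encrypt traffic, but it would accept a certificate presented by anyone in the middle, which is why the verification settings matter as much as the encryption itself.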

Other potential risks to acknowledge?

Demand

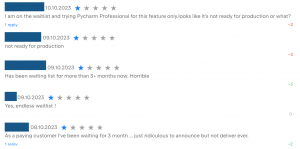

Generative AI has enjoyed an explosion in popularity, which in turn puts pressure on the providers of AI tools as they attempt to cope with demand. ChatGPT has addressed the problem with a premium (i.e. paid-for) service alongside a free service that is not always available. JetBrains has not really addressed the issue at all with its AI Assistant, which is a shame: we use many JetBrains products and felt they were missing a trick. JetBrains AI Assistant can be fully integrated with the range of JetBrains IDEs, but we didn't get far because, along with other users, we were placed on a waiting list before being granted access. This highlights a problem: the unpredictable waiting time for access makes widespread adoption of the software very difficult.

Bias

Bias is a complex subject. Quite rightly, most concerns focus on bias in training data that reflects flawed human decisions or social inequities. That is a blog topic in its own right; here we focus on bias towards vulnerable code. When the code an AI learns from contains vulnerabilities, the code it produces will tend to be insecure too. From a secure-by-design perspective, this causes the most concern. How does this happen?

Models are trained on many lines of open-source code, so vulnerabilities in that code can be repeated in generated suggestions and then exploited. This was researched recently in the paper “An Empirical Cybersecurity Evaluation of GitHub Copilot’s Code Contributions”, which tested the security of code produced by GitHub Copilot and found that, across a range of test cases, Copilot produced vulnerable code.
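To make "vulnerable code" concrete, here is a hand-written illustration (not output from Copilot or taken from the paper) of SQL injection (CWE-89), a common weakness that appears widely in public code and so could plausibly be reproduced by a model trained on it. The table, data and function names are hypothetical, and the example uses Python's built-in `sqlite3` module with an in-memory database.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # Vulnerable pattern: user input is spliced directly into the SQL text,
    # so input such as "' OR '1'='1" changes the logic of the query.
    query = f"SELECT name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Safer pattern: a parameterized query. The value is passed separately
    # from the SQL text, so it is always treated as data, never as SQL.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (username,)
    ).fetchall()


# Hypothetical test data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])

payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # every row is returned
print(find_user_safe(conn, payload))    # no rows match the literal string
```

Both functions look plausible at a glance, which is why a suggested snippet of the first kind can slip through unless someone reviewing it knows what to look for.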

Another consideration is the programmer’s ability to write prompts that are detailed and describe the problem correctly. Prompts must be clear and precise for the suggestions to meet the requirements; otherwise incorrect code may be suggested and inserted, and it can take a lot of manual editing and debugging to turn that code into a workable solution.
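As a sketch of the difference, compare a vague comment prompt with a detailed one. The prompts and the function below are our own hypothetical example, and the implementation is hand-written to show what code satisfying the detailed prompt looks like; it is not the output of any particular assistant. The detailed prompt pins down the edge cases (even-length input, empty input, mutating the caller's list) that the vague one leaves for the tool to guess.

```python
# Vague prompt: "# compute the median"
#   Leaves open: even-length lists, empty input, whether sorting in place is OK.

# Detailed prompt:
# Return the median of a list of numbers without modifying the list.
# For an even number of values, return the mean of the two middle values.
# Raise ValueError if the list is empty.
def median(values: list[float]) -> float:
    if not values:
        raise ValueError("median() requires at least one value")
    ordered = sorted(values)  # sorted() returns a new list; the input is untouched
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


print(median([3, 1, 2]))     # 2
print(median([4, 1, 3, 2]))  # 2.5
```

Each requirement in the detailed prompt maps to a line that can be checked in review or in a unit test, which makes an incorrect suggestion easier to spot.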

Best practices such as software testing and code review will help mitigate the risk of generated code allowing malicious data to be injected into the system. However, given the security implications and the extra time spent checking and correcting suggestions, it does raise the question: why use it at all if you know what you are doing?

So…should AI in IDEs be used?

In a nutshell:

- Our research showed a trend of recurring problems that often hindered development.

- Accessing the software involved unacceptably long waiting times.

- Generated code could contain a wide range of vulnerabilities or bugs.

These problems will likely be fixed in future, with users able to access the software immediately and more refined AI models producing higher-quality code with fewer bugs and security vulnerabilities. We will therefore continue to explore the possibilities of an AI pair programmer since, without their current faults, these tools could be adopted with great success.

But, in our opinion, successful use will still depend largely on the expertise and knowledge of the user. The role of the Software Developer is safe for now!